A CMO’s Guide to GEO/AEO

This is what CMOs need to know about GEO/AEO and its relationship with SEO.

Whether you are a CMO or report into one, this guide is for you. It will help you cut through the noise around what GEO/AEO is and how it differs from SEO.

I recently did a podcast where I mentioned NOVOS sits in between two worlds: the SEO world and the eCommerce world.

In the SEO world, GEO/AEO is not a separate thing; it’s seen as an activity within an SEO’s remit, simply an adaptation of content for the AI era.

In the eCom world, GEO/AEO is very much still a thing, as is the case across broader marketing industries.

So in this post, I’ve focused on the eCommerce world. If GEO stays, this is how you should be viewing it as an eCommerce leader.

By the end of this post, your takeaways should be:

‘GEO’ is just a small but important part of ranking in GPT, Google AI, etc.

Without SEO rankings, there’s no GEO visibility

A broader understanding of how LLMs retrieve information/content and give answers

Below is a screenshot from a Google rep which tells you what you need to know. Either way, let’s break it down more.

What is GEO/AEO?

In case you don’t know OR if you use one of the many other acronyms available, e.g. AIO, GXO, AEO, oLLM, or GEX (only one of these is made up…) - what exactly is GEO?

GEO stands for Generative Engine Optimisation, referring to optimising for AI-driven search results (ChatGPT, Perplexity, Gemini, etc.). The narrative you may hear (particularly if your only ‘knowledge building’ is through LinkedIn) is that ‘SEO’ is once again dead and GEO is the new channel to focus on.

This isn’t the case; the element referred to as ‘GEO’ is just an adaptation of how SEO works with this new technology.

Where Does GEO Fit in with SEO? Is There a Difference?

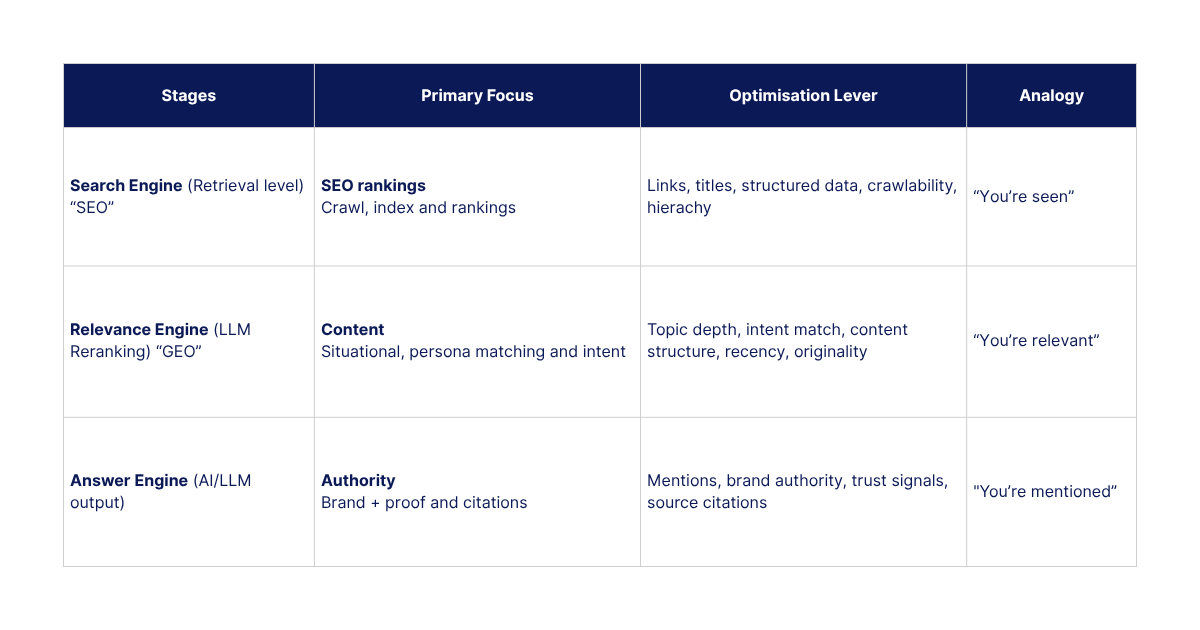

We’ve simplified how to visualise GEO vs SEO in this table:

This table draws on the RAG (retrieval-augmented generation) model, which outlines how LLMs broadly collect and serve answers:

Retrieval of information

Augmentation of the request with context from the retrieved information

Generation of an answer based on the best, relevant source

Note: There are stages before this, including the knowledge already stored in an LLM from its training, called its parametric memory, but we don’t need to go into that level of detail for marketing.
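To make the three stages concrete, here is a deliberately simplified sketch in Python. It is not how any real LLM works: the `Page` class, the word-overlap `relevance` score, and the example.com URLs are all assumptions made up for illustration. What it does show is the order of operations, and why a page that never makes the retrieval shortlist cannot be cited, however relevant it is.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str
    search_rank: int  # position in traditional search results (1 = top)


def retrieve(pages, limit=2):
    """Stage 1: shortlist the pages that rank best in search."""
    return sorted(pages, key=lambda p: p.search_rank)[:limit]


def relevance(prompt, page):
    """Toy relevance score: share of prompt words found in the page text."""
    prompt_words = set(prompt.lower().split())
    page_words = set(page.text.lower().split())
    return len(prompt_words & page_words) / len(prompt_words)


def augment(prompt, shortlist):
    """Stage 2: rerank the shortlist against the prompt to use as context."""
    return sorted(shortlist, key=lambda p: relevance(prompt, p), reverse=True)


def generate(prompt, context):
    """Stage 3: answer from the best-matching source and cite it."""
    return f"Answer to '{prompt}' based on {context[0].url}"


# Hypothetical pages: the third is a perfect match but ranks too low to be seen.
pages = [
    Page("https://example.com/sofas", "shop sofas and armchairs", 1),
    Page("https://example.com/sofa-guide",
         "sofa buying guide for families with toddlers", 2),
    Page("https://example.com/toddler-sofas",
         "the best sofa for families with toddlers", 9),
]

prompt = "best sofa for families with toddlers"
shortlist = retrieve(pages)
context = augment(prompt, shortlist)
answer = generate(prompt, context)
print(answer)
```

The page ranked ninth matches the prompt word for word, yet it is never considered because it did not survive retrieval. The page ranked second wins the citation over the page ranked first because it is the more relevant of the two that were seen.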

Stage 1: Your Brand is Seen

This is retrieval. LLMs need a source to collect information from; as it stands, they are using a combination of Google and Bing rankings.

Search results are already a prioritised list of the best, most relevant content online, so why would the LLMs not use them as a reference point?

Google Search Console (GSC) impressions have been a mess this year for this reason: aggressive crawling of Google results by these LLM bots inflates impressions. Google partly responded by no longer allowing 100+ results to be crawled and extracted at the same time, limiting it to 10.

So, in the background, these LLMs are performing searches based on the probability of what the searcher will ask for next. See Google’s product lead Steins’ quote below:

Actions:

If you don’t have good SEO rankings, the LLMs won’t retrieve your website

You won’t be a source within the retrieval process, and therefore, the next 2 stages are redundant

Focus on better SEO rankings by improving technical foundations and internal linking

LLMs still struggle with JavaScript, so if your site is headless or relies on JavaScript to render content anywhere, the LLMs will struggle to read it unless it’s server-side rendered

You’ll need a clear content strategy to target niche terms your audience is searching for, and put a high emphasis on intent. If you are writing an informational article but the search results are all commercial, it won’t rank. Think of this as user experience for content

Finally, once on-site is in a good place, you need backlinks. Google still relies massively on backlinks. This is the part that’s very difficult to do and therefore creates a much bigger competitive advantage for your SEO performance, i.e. competitors can copy your content, but they can’t copy your backlinks; earning them takes a very long time.
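On the JavaScript point above, a quick sanity check is to look at the raw HTML your server returns, before any JavaScript runs, and see whether your key content is actually in it. The sketch below uses only Python’s standard library; the function name `missing_without_js` and both HTML snippets are made up for illustration, and in practice you would feed it the HTML fetched from your own page.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text present in the raw HTML, ignoring scripts and styles."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_without_js(raw_html, must_have):
    """Return the phrases that a reader who cannot run JavaScript would not see."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in must_have if phrase.lower() not in text]


# Hypothetical examples: the same product content, delivered two ways.
server_rendered = (
    "<html><body><h1>Hiking backpack</h1>"
    "<p>Capacity: 40L</p></body></html>"
)
client_rendered = (
    '<html><body><div id="app"></div>'
    '<script>render("Hiking backpack", "Capacity: 40L")</script>'
    "</body></html>"
)

key_phrases = ["Hiking backpack", "Capacity: 40L"]
print(missing_without_js(server_rendered, key_phrases))
print(missing_without_js(client_rendered, key_phrases))
```

If the second call reports your product name or key specs as missing, that content only exists after JavaScript runs, which is the situation where server-side rendering matters.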

Stage 2: You’re Relevant

If you are focused on the term GEO/AEO, then this is where it comes into play.

This is the stage where LLMs take the information retrieved in stage 1 and match the sources to the prompt based on relevancy.

It can be viewed as a ‘reranking’ stage based on stage 1. LLMs refine the shortlist using context and intent.

A key tool in an SEO’s arsenal here is called query fan-out mapping (which is referred to in the Google quote above).

How Query Fan-Out Works

AI Search issues dozens of background searches per query, using Google Search as a tool to fetch real-time data and evaluate quality signals. As part of our work with clients, we replicate this modelling to predict what the user would search for next.
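As a rough illustration of what a fan-out looks like, the sketch below expands one seed topic into the kind of background searches an AI search tool might run. Real systems generate these with a model rather than templates, so treat this purely as a planning aid: the `fan_out` function, the personas, and the follow-up angles are all illustrative assumptions.

```python
from itertools import product


def fan_out(topic, personas, follow_ups):
    """Expand a seed topic into persona-specific and follow-up queries."""
    queries = [f"{topic} for {persona}" for persona in personas]
    queries += [
        f"{topic} for {persona} {follow_up}"
        for persona, follow_up in product(personas, follow_ups)
    ]
    return queries


# Hypothetical inputs for a furniture retailer.
queries = fan_out(
    "sofa buying guide",
    personas=["families with toddlers", "first-time renters"],
    follow_ups=["costs", "pitfalls", "pros and cons"],
)

for query in queries:
    print(query)
```

Two personas and three follow-up angles already produce eight distinct queries from a single topic. Each one is a gap your content either covers or leaves for a competitor.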

Takeaways:

SEO results provide the search index. LLMs provide the brain, i.e. they decide the relevancy and how to summarise/rephrase for the searcher.

The content strategy referenced in the retrieval stage needs an extra level of depth. Use traditional SEO data to identify where your brand has existing success, then match this with your personas.

Long-tail AI search brings out the clear contextual needs of each persona in their prompts/search terms (a key difference from the traditional keywords in stage 1).

This persona layer is what satisfies the LLM’s need for intent matching: providing content for each persona’s need gives the LLM content to match to their desired intent.

Example: Traditionally, an SEO would focus on a “Sofa buying guide.” Now you need greater depth, matched to your personas’ specific needs. This takes content to a new level - such as buying guides tailored for families with toddlers, first-time renters, apartment dwellers, hosts who want a statement piece, or design enthusiasts seeking niche, trend-defying furniture from influential designers.

Actions:

Use contextual cues, e.g. “for busy families”, to help LLMs match relevance. This includes your audience for content as well as products, e.g. “for beginners”, “for CMOs”, “for families with toddlers”

Feed models structured data, e.g. FAQs and summaries

You win at this stage by being the clearest, most complete and most contextually relevant answer - not by keyword tricks.

Cover the follow-up questions - we call this query fan-out mapping. What would the reader’s next questions be, e.g. costs, timelines, pitfalls, pros/cons, and how it works?

Cite your own sources next to any claims

Add Schema data that reflects how people ask, e.g. on PDPs (product detail pages): Product + Offer + return policy + shipping details

Focus on 1 intent per URL

Use internal linking for additional context, e.g. not ‘here’ but ‘our sofa buying guide here’
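The internal linking point is easy to audit. The sketch below scans a piece of HTML and flags links whose anchor text gives no context. It uses only Python’s standard library; the list of generic phrases and the sample HTML are my own assumptions, so adjust both to your site.

```python
from html.parser import HTMLParser

# Assumed list of anchor texts that carry no context; extend as needed.
GENERIC_ANCHORS = {"here", "click here", "read more", "learn more", "this page"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every link in the HTML."""

    def __init__(self):
        super().__init__()
        self.anchors = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.anchors.append((self._href, text))
            self._href = None


def generic_links(html):
    """Return the links whose anchor text is too generic to add context."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    return [
        (href, text)
        for href, text in collector.anchors
        if text.lower() in GENERIC_ANCHORS
    ]


# Hypothetical snippet containing one descriptive and one generic link.
sample_html = (
    '<p>Read <a href="/sofa-guide">our sofa buying guide</a> '
    'or find it <a href="/sofa-guide">here</a>.</p>'
)
flagged = generic_links(sample_html)
print(flagged)
```

Only the second link is flagged. The first already tells a reader, human or machine, what sits behind it.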

PDP Example: Hiking backpack for train travel across Europe

Answer capsule: who’s it for, capacity (20-60L), weight, materials, access, fits overhead racks on EU trains

Spec table: litres, weight, torso fit, laptop sleeve size, rain cover, warranty

Fit/not fit: commuters & weekend hikes vs multi-day trekking

Comparison table: your model vs 2 close alternatives

FAQs: Cabin size on Eurostar / Waterproof / Back panel venting / carry on rules

Schema: Product, OfferShippingDetails, MerchantReturnPolicy, AggregateRating
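For the Schema item in the list above, here is a minimal sketch of what that markup could look like, built in Python and printed as a JSON-LD script tag. The type and property names come from schema.org, but every value (product name, price, rating, country, return window) is a placeholder I have invented, and this is not a complete or validated implementation. Check it against Google’s structured data documentation before shipping anything.

```python
import json

# All values below are placeholders for illustration only.
product_jsonld = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example 40L Hiking Backpack",
    "description": "Hiking backpack sized for train travel across Europe.",
    "aggregateRating": {
        "@type": "AggregateRating",
        "ratingValue": 4.6,
        "reviewCount": 128,
    },
    "offers": {
        "@type": "Offer",
        "price": "129.00",
        "priceCurrency": "EUR",
        "availability": "https://schema.org/InStock",
        "shippingDetails": {
            "@type": "OfferShippingDetails",
            "shippingRate": {
                "@type": "MonetaryAmount",
                "value": "0",
                "currency": "EUR",
            },
            "shippingDestination": {
                "@type": "DefinedRegion",
                "addressCountry": "FR",
            },
        },
        "hasMerchantReturnPolicy": {
            "@type": "MerchantReturnPolicy",
            "applicableCountry": "FR",
            "returnPolicyCategory": (
                "https://schema.org/MerchantReturnFiniteReturnWindow"
            ),
            "merchantReturnDays": 30,
        },
    },
}

# Wrap the JSON in the script tag that would sit in the page's HTML.
script_open = '<script type="application/ld+json">'
script_tag = f"{script_open}\n{json.dumps(product_jsonld, indent=2)}\n</script>"
print(script_tag)
```

The shipping and return details sit inside the offer, and the rating sits on the product itself. That mirrors how people ask: what is it, what does it cost, how does it get to me, and can I send it back?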

Stage 3: Your Brand is Mentioned

The ultimate goal of AI search is to be cited as a source in the answer. This builds brand visibility but, more importantly, credibility and trust (which ads can’t do).

It eventually pulls users through the funnel. If you’ve completed stage 2 correctly, you’ll be mentioned again when users ask follow-up questions mapped in your query fan-out activity.

But here’s what most people miss about LLM ranking.

At this stage, the LLM has still retrieved and evaluated hundreds of relevant results.

Your direct competitor could read this and complete stages 1 and 2 just as well as you.

The true differentiator and competitive advantage comes from offsite work - digital PR and brand building.

At this stage, it’s called citation building rather than link building.

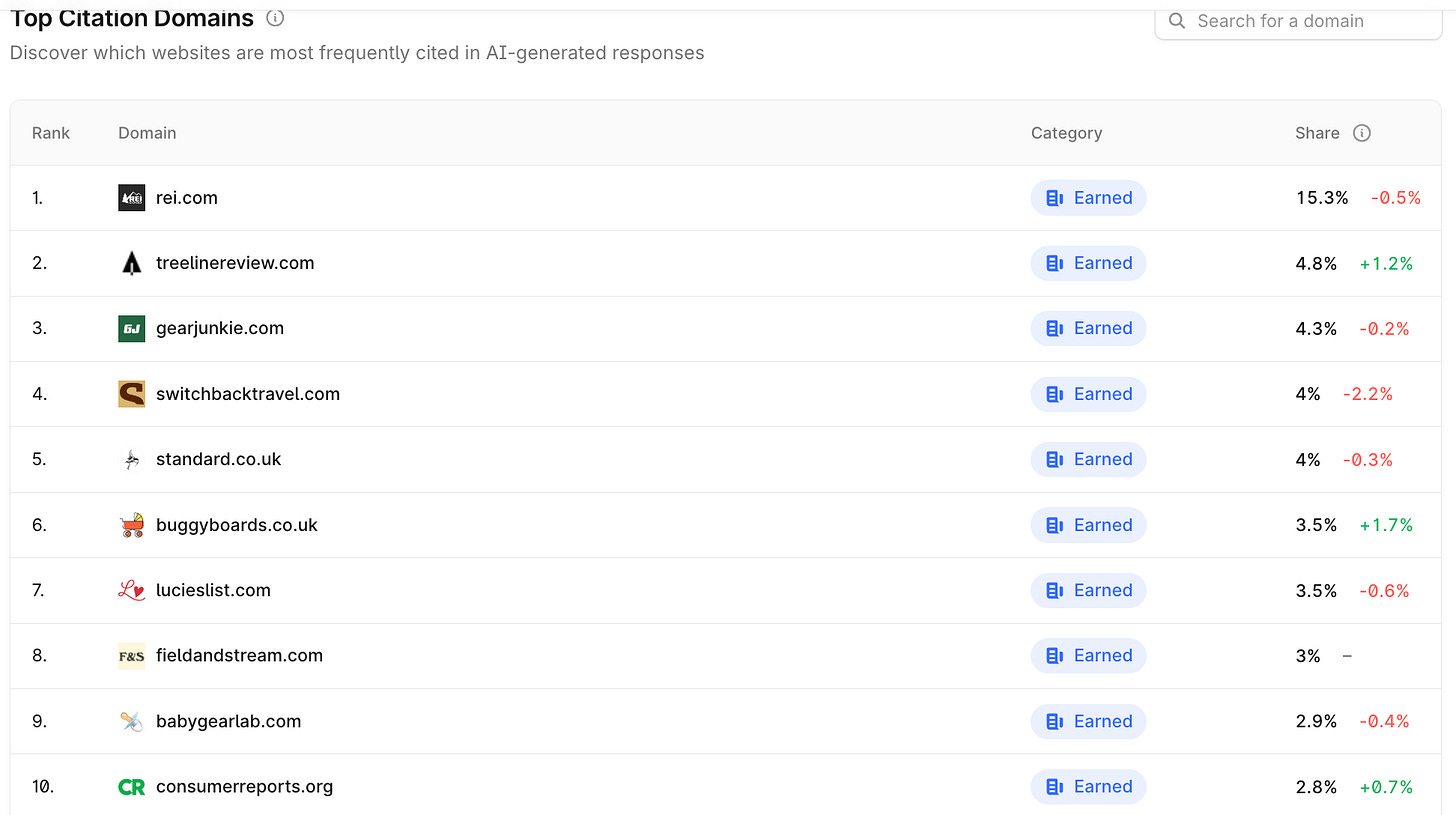

Each LLM has a set of sites it considers authoritative sources. Over time, these lists will likely diverge more.

What does this mean for you?

To improve Perplexity rankings for relevant prompts, you need coverage on publications it deems relevant and authoritative.

This prospect list will differ from ChatGPT, Gemini, or Grok (if that’s your thing).

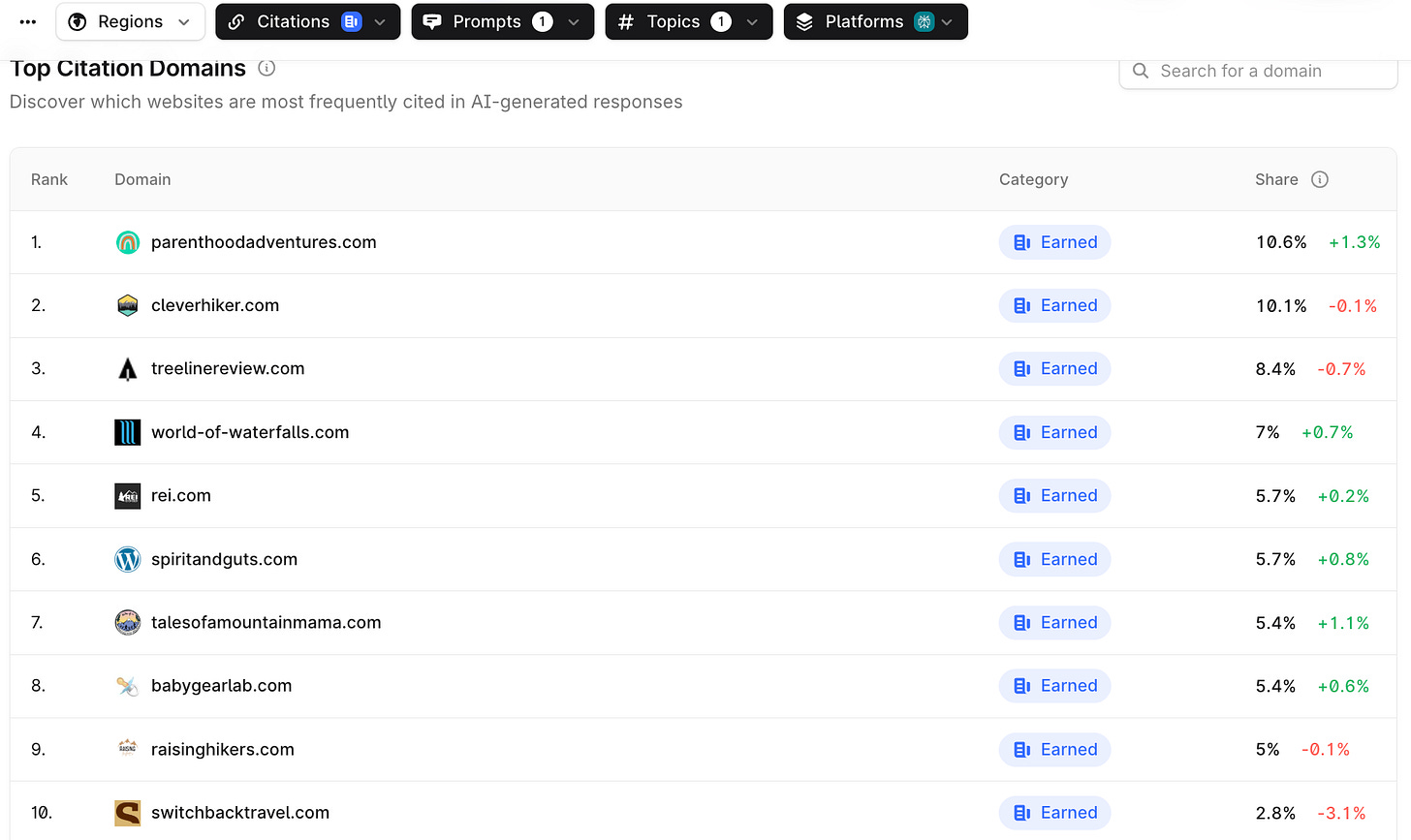

For example, when looking at the topic of Children’s Carriers, notice the difference between sites ChatGPT views as authoritative versus those Perplexity prioritises. As I mentioned, I expect this divergence to grow over time as each platform advances and develops its own quality guidelines.


Summary

AI has changed the search interface and encouraged more complex queries, but the core ranking factors remain the same—expertise and authority.

Google calls this an “expansionary moment.” People are asking far more questions now, particularly around advice, how-tos, and complex needs rather than simple queries.

Instead of optimising for isolated keywords, brands should anticipate the full intent and informational journey behind the conversational questions their personas ask.

Foundational SEO gets you seen. Then adapt your content strategy for AI search (call it GEO or AEO if you want). But the true differentiator comes from offsite coverage—and this won’t go away because it’s considerably harder to achieve than making changes on your own website.

Digital PR is essential at stage 1 for SEO fundamentals through link building. It becomes valuable again at stage 3, where you need citations on publications that LLMs deem authoritative. There will be overlap, but if you aren’t tracking these sites and adding them to your prospect list, you’ll miss out on that competitive advantage and fail to make your brand visible.