Mass Content Creation - Don’t F*ck it up.

Should you dynamically generate content at scale? With the rise of AI, this question has become increasingly common in the eCommerce world, making SEOs nervous. Here's why.

For eCommerce brands, dynamic content generation typically means getting Google to index filter pages (or PDP copy, which is a topic for another post!).

For filter pages, the content naturally comes from the products themselves, and properly tagged items automatically generate unique pages. For example, a Black Sofa page will show only black sofas, making the content unique compared to the main sofa page and other filtered pages like those for red sofas (provided the correct SEO is done on the URLs).
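Here is a minimal Python sketch of why that content comes "naturally". Grouping tagged products by category and colour gives you one page per combination, and each page lists only its own products. The product data, the `colour` tag and the `/sofas/black/` URL pattern are hypothetical assumptions for illustration, not how any particular platform does it.

```python
from collections import defaultdict

# Hypothetical catalogue: each product is tagged with a category and a colour.
PRODUCTS = [
    {"name": "Oslo 3-seater", "category": "sofas", "colour": "black"},
    {"name": "Bergen corner sofa", "category": "sofas", "colour": "black"},
    {"name": "Lund 2-seater", "category": "sofas", "colour": "red"},
    {"name": "Malmo armchair", "category": "armchairs", "colour": "grey"},
]


def build_filter_pages(products):
    """Group products by (category, colour) so each group becomes one filter page.

    The /<category>/<colour>/ URL pattern is an assumption, not a platform standard.
    """
    pages = defaultdict(list)
    for product in products:
        url = f"/{product['category']}/{product['colour']}/"
        pages[url].append(product["name"])
    # Each page lists only its own products, so its content differs from its siblings.
    return dict(pages)


if __name__ == "__main__":
    for url, names in sorted(build_filter_pages(PRODUCTS).items()):
        print(url, names)
```

Untagged or mistagged products simply never appear on the right page. That is why the tagging has to be correct before any of this is worth indexing.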

Why index filters?

The main goal is to create targeted pages that better match specific commercial search terms and, therefore, their search intent, which also improves the user experience. For example, without indexed filters, a single sofa page has to target "black sofas" (3.3k searches per month), "red sofas" (300 searches per month) and "grey sofas" (1.4k searches per month).

When someone searches for "black sofas" and lands on a page showing only black sofas, it's a much better user experience than landing on a general sofa page where they must apply a filter.

This strategy extends beyond SEO: these dedicated pages can also enhance your paid search and CRM campaigns.

When you add up the search volume across all your product attributes, the numbers typically become compelling enough to warrant further investigation.

How do you index your filters for Google?

This is a complex question, and it takes an SEO expert to determine the best approach for your specific platform.

On Shopify, you'll typically need a third-party extension and modified product tagging.

Magento/Adobe follows a similar approach, though a skilled developer can create a custom solution.

Either way, both options tend to feel a bit 'hacky' and usually come with heavy compromises. Our biggest successes have come from headless or bespoke platforms, mainly because of the control and flexibility they offer: you can build something that fits your existing set-up.

Again, it takes a boatload of consultancy, scoping and auditing to ensure the filters are SEO-friendly and readable, but in our experience, the main errors we see are:

Focusing too much on making these pages accessible. Making them accessible is usually easy; the hard part is getting Google to stop crawling and indexing the combinations you don't want. Create rules based on URL structure or page elements that tell Google where to stop.

Relying too much on client-side JavaScript. The page may look fine from a user's perspective, but Google is reading the server-side HTML, which hasn't been updated.

Lack of strategy or control. For example, it may be fine to index colour across all product categories for a furniture brand, but you may only want size to be available for 1-2 categories and pattern available only for accessories. This is essential for marketplaces and large inventories.
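The first and third points can be combined into a single rule set. Below is a minimal Python sketch that decides a page's robots meta value from its URL structure and a per-category allowlist: colour everywhere, size for a couple of categories, pattern only for accessories. It assumes filters are passed as query parameters and that only single-filter pages are indexable. The categories, parameter names and the one-filter limit are all hypothetical.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical allowlist of filter attributes that may produce indexable pages.
# "*" applies to every category.
INDEXABLE_FILTERS = {
    "*": {"colour"},
    "sofas": {"size"},
    "beds": {"size"},
    "accessories": {"pattern"},
}
MAX_INDEXABLE_FILTERS = 1  # hypothetical: combinations such as colour + size stay noindex


def robots_meta_for(url: str) -> str:
    """Decide the robots meta value for a category URL such as /sofas/?colour=black."""
    parsed = urlparse(url)
    segments = [s for s in parsed.path.split("/") if s]
    category = segments[0] if segments else ""
    params = parse_qs(parsed.query)
    allowed = INDEXABLE_FILTERS["*"] | INDEXABLE_FILTERS.get(category, set())

    # Any parameter outside the allowlist (sort, page, tracking, size on tables...) is noindex.
    if any(name not in allowed for name in params):
        return "noindex, follow"
    # Count every value, so colour=black&colour=red counts as two filters.
    if sum(len(values) for values in params.values()) > MAX_INDEXABLE_FILTERS:
        return "noindex, follow"
    return "index, follow"
```

Bear in mind that `noindex` only controls indexing. Google still has to crawl a page to see the tag, so cutting crawling as well means not linking to the excluded combinations in the first place.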

I experienced infinite crawl loops at MADE.com. The developers created a short-term 'hack' for me that was heavily JavaScript-focused. In short, it added a folder to the URL so that the content was served statically and could be indexed. However, it kept adding another folder each time, so Google kept crawling, and we ended up with some very, very long URLs with duplicate content.
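A guard against this kind of loop can be quite simple. The minimal Python sketch below flags URLs whose path repeats a segment or is deeper than any real page. It also builds a de-duplicated URL that you could redirect to or canonicalise. The `static` folder name and the depth limit of 4 are hypothetical; they are not the actual MADE.com setup.

```python
from urllib.parse import urlparse, urlunparse

MAX_PATH_DEPTH = 4  # hypothetical: the deepest legitimate path on this example site


def is_crawl_trap(url: str) -> bool:
    """Flag URLs whose path repeats a segment or is deeper than any real page."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return len(segments) > MAX_PATH_DEPTH or len(segments) != len(set(segments))


def canonical_url(url: str) -> str:
    """Drop repeated path segments (keeping the first occurrence) to get a redirect target."""
    parts = urlparse(url)
    seen = []
    for segment in (s for s in parts.path.split("/") if s):
        if segment not in seen:
            seen.append(segment)
    path = "/" + "".join(f"{s}/" for s in seen)
    return urlunparse(parts._replace(path=path))
```

For example, `https://example.com/sofas/black/static/static/static/` is flagged and maps back to `https://example.com/sofas/black/static/`, so every crawl of the looping URL lands on one canonical page.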

Finally… the most significant issue we see is businesses going too far or not thinking things through, usually because they haven't brought in an SEO for support. Here are a few examples.

When it goes wrong. Like, really wrong.

The worst example I've ever seen of mass content creation was actually not an eCommerce brand but a car repair company. It was called Who Can Fix My Car (WCFMC), and this was the 2015-2017 era (when the world was so much simpler). I've named them because it's a completely different business these days.

In short, the marketing lead had been aware of the possibility of mass content creation during the RFP process. Rather than hiring SEO agencies immediately, he implemented the "quick wins" himself before bringing in an agency 6–7 months later. I was part of that agency and worked on the resulting project.

This was the first agency I worked at, so I was still a relatively fresh-faced SEO with no scars (yet). This was probably my first major one. I wish I still had slide decks from this period, but the best I could find were some old notes I'd emailed to myself (cloud-based note-sharing didn't seem to be a thing in 2015!).

According to my notes, the WCFMC website had:

75 different types of cars (BMW, Audi)

100 different repair options (Clutch repair, Engine repair)

1,000 locations across the UK (Hull, Manchester)

Combining these attributes gives 75 × 100 × 1,000 = 7.5 million possible URLs. That roughly matches my notes from the time, which show the website had 8 million indexed URLs.
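The arithmetic is worth seeing in code, because it shows how quickly a template multiplies. The minimal Python sketch below uses the counts from the notes above. The slugs and the `/repair/make/location/` URL pattern are hypothetical placeholders, not WCFMC's real URLs.

```python
import math
from itertools import islice, product

# Counts from the notes above; the slugs and URL pattern are hypothetical.
CAR_MAKES = [f"make-{i}" for i in range(75)]
REPAIRS = [f"repair-{i}" for i in range(100)]
LOCATIONS = [f"location-{i}" for i in range(1_000)]


def landing_page_urls():
    """Yield one URL per make x repair x location combination (lazily)."""
    for make, repair, location in product(CAR_MAKES, REPAIRS, LOCATIONS):
        yield f"/{repair}/{make}/{location}/"


# The total is simply the product of the attribute counts: 75 * 100 * 1,000.
TOTAL_URLS = math.prod(len(a) for a in (CAR_MAKES, REPAIRS, LOCATIONS))

if __name__ == "__main__":
    print(f"{TOTAL_URLS:,}")  # 7,500,000
    print(list(islice(landing_page_urls(), 2)))
```

Adding just one more attribute with ten values would push this to 75 million pages, which is why the combinations need capping long before the templates go live.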

Google essentially got fed up with the website. There's a concept of "index bloat", closely tied to crawl budget: in a nutshell, Google will only spend a finite amount of time and effort crawling your website. If you're the BBC, you're an authority, so a massive website warrants Google taking extra time and effort to crawl it. If you're WCFMC, it's the opposite. The issue was so bad that we couldn't even get Google to crawl deep enough to remove pages from the index, so we had to rely on robots.txt to remove them. As you can imagine, the client had just seen 6 months of "growth", so another 6 months of stagnation didn't go down very well.
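Before deploying robots.txt rules like these, it's worth checking exactly which URLs they block. The minimal Python sketch below uses the standard library's `urllib.robotparser` to test hypothetical rules offline. The paths are illustrative only and are not WCFMC's actual URL structure. Note that Python's parser does simple prefix matching and does not implement Google's wildcard handling.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking the deep, templated repair/location pages.
# The paths are illustrative only; they are not WCFMC's real URL structure.
ROBOTS_TXT = """\
User-agent: *
Disallow: /repairs/
Disallow: /garages/search/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for url in ("https://example.com/repairs/clutch/bmw/hull/",
                "https://example.com/garages/acme-motors/"):
        print(url, "allowed" if rp.can_fetch("Googlebot", url) else "blocked")
```

Keep in mind that robots.txt controls crawling rather than indexing directly, so blocked URLs can take a long time to drop out of the index.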

It took so long to remove these pages from the index and restore the site to a respectable level that the client eventually left, frustrated with the lack of perceived progress. We also discovered that when they updated basic information like garage addresses, names or new features, Google wasn't seeing or indexing these changes; for deep garage pages, Google was still indexing content from over a year earlier.

This is further evidence that bigger isn't better. Some of our biggest (and quickest) SEO successes have come from reducing bloat and streamlining large websites with duplicated or templated content.

Aggressive lean

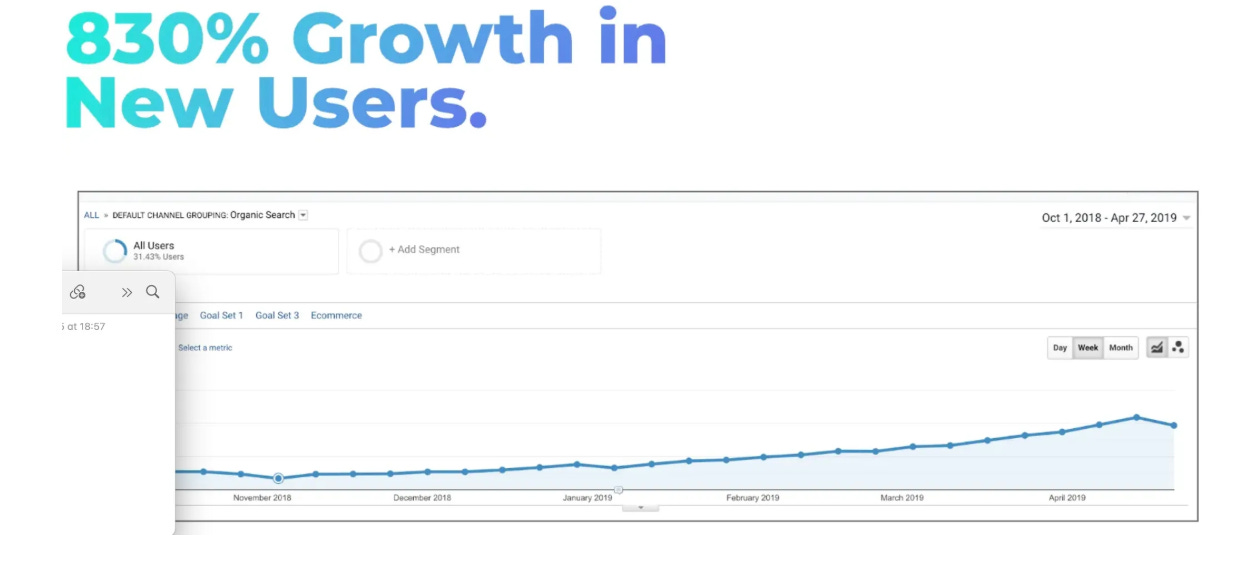

This is an old client of ours, Stasher, which I always enjoy referencing because the results couldn't have gone better.

Bloom & Wild: Location, Location, Location.

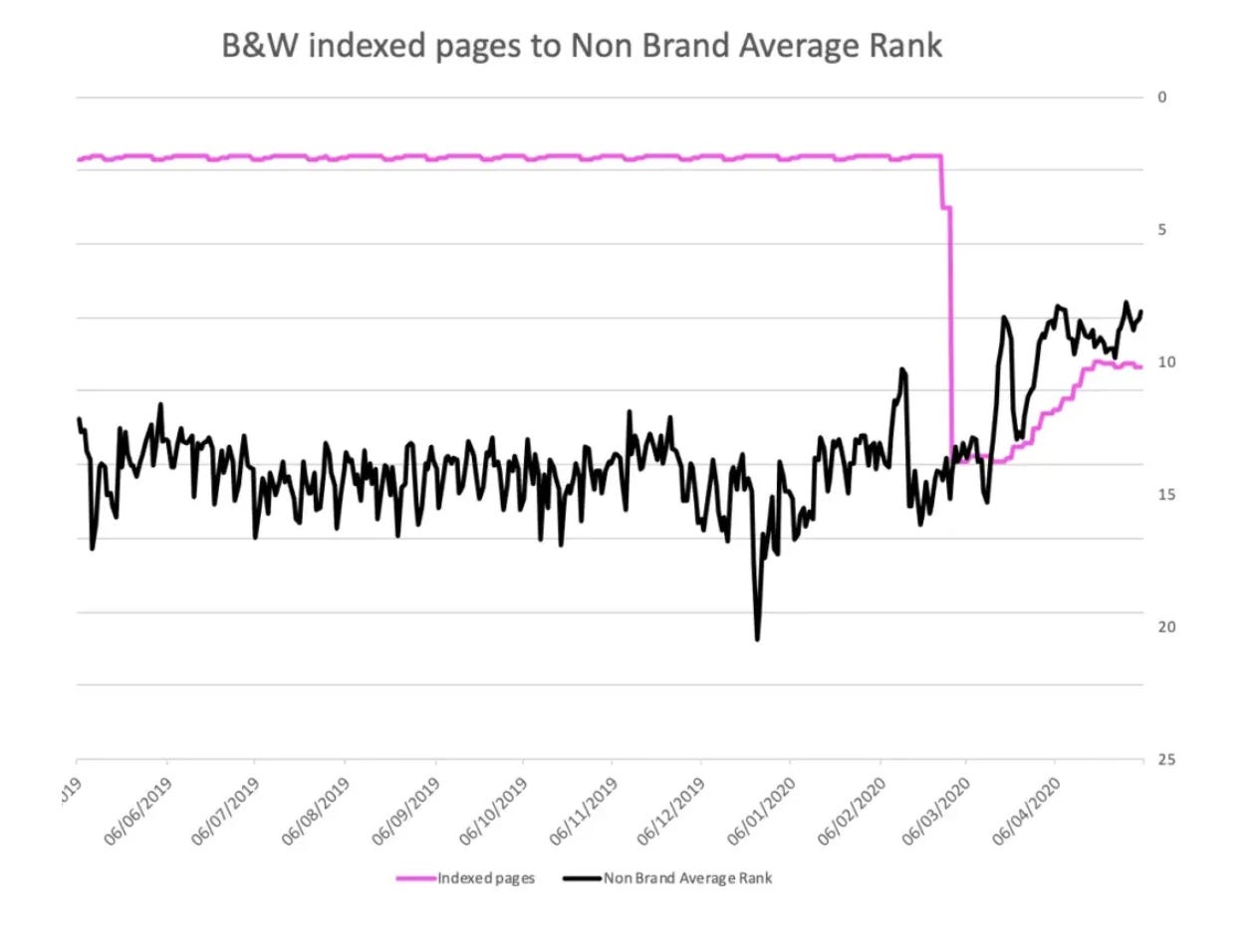

Years ago, Bloom & Wild adopted a mass content strategy that remained on their website as a legacy feature. It wasn't particularly effective, but the general thinking was that it wasn't causing harm, and since some pages were generating traffic, they were left untouched.

Conclusion: or, just don't do it.

It's easy to put together an opportunity analysis to prove the value of extra landing pages, and doing it at scale makes the numbers look even better.