Why PR Is Now the Key to Training ChatGPT on Your Brand

From Awareness to Authority: How the traditional PR model is collapsing in the face of AI search.

For years, PR success has been measured by the volume of coverage and the clicks it generated.

You'd create a strategy that earned coverage, which in turn drove consumers to your site, and then watch as brand awareness, site traffic and, hopefully, conversions poured in.

But that model has collapsed in the face of AI search.

AI is changing how all of us discover, research and ultimately trust brands, and it is forcing PR to evolve more than at any point in the past decade.

The fight now is not just about securing coverage; it's about building an understanding of your brand within AI systems such as ChatGPT and Perplexity.

The Old Funnel Has Collapsed

Traditionally, visibility led to clicks. For eCommerce brands, all PR and SEO strategies hinged on driving people directly to their site.

A PR strategy also offered a degree of control: you could tell a story, secure coverage and a link, and build your pipeline from awareness through to conversion.

With AI search, that control has largely gone.

AI assistants built on Large Language Models (LLMs), such as ChatGPT and Perplexity, are designed to give consumers the answers they need without having to click through to a site.

This means visibility is no longer about how many people visit your site; it's about whether AI understands and trusts your brand enough to surface it in its answers.

For PR, this fundamentally changes things.

AI Visibility Is The New Focus For PR

Currently, LLMs lean on a surprisingly small set of “trusted domains”. These are the domains you would typically expect when someone talks about a ‘high-authority’ site: the BBC, The Guardian and Forbes, to name a few.

Critically, PRs need to understand that strong coverage doesn’t automatically mean you’ll be surfaced in AI search results, particularly if there’s no consistency behind the coverage you’re securing.

This is because LLMs don’t simply crawl for links; they learn patterns across the web. Who talks about your brand? How consistently does that conversation happen, and in what context? LLMs study tone, brand sentiment and context to decide whether your brand should be surfaced, whether it’s relevant and, most importantly, whether it’s credible for a given topic.

Put simply, AI learns who you are based on all of the stories about you online.

So what does that mean for PR? We’re no longer just a channel for awareness; we’re a channel that drives AI understanding.

The Jump PR Must Make

The jump to AI search is already here: consumers now recognise it as part of how they source information, and ChatGPT alone enjoys around 800 million users per month.

For years, PR impact has been measured in specific ways: volume of coverage, coverage quality and more SEO-led metrics such as traffic. Those things still matter, but they now need to be assessed through a different lens.

Now, visibility in LLMs is earned through a combination of:

1. Relevancy - Are you consistently being mentioned in the right context within your niche?

2. Credibility - Are the mentions you’re securing coming from trusted sources?

3. Consistency - Are you appearing often enough so that AI can confidently connect your brand to key topics and conversations in your niche?

It’s no longer just about moments; PR now rewards patterns and consistency. Brands must understand this and take seriously how consistency can positively impact their visibility.

You can’t just go viral once with a single campaign and expect AI to remember who your brand is and what it stands for. It’s the consistency of repeated mentions that teaches LLMs who you are, and this is what begins to drive long-term visibility.

How PR Needs To Evolve: The SEED Framework

Our proprietary SEED framework offers clarity to eCommerce brands, helping them navigate the constantly evolving landscape of AI search by focusing on four key areas.

But how can we incorporate the SEED framework into our PR strategies?

S - Situational Relevance

PR earns coverage that builds topical authority in the correct context for your brand.

AI understands topics by category, so your PR strategy needs to build authority across different publication types depending on the personas you’re targeting.

It’s important to remember that your audience will differ, so understanding the different personas you’re targeting is crucial. The sources cited for one persona will vary from another, as different people consume various types of media; therefore, the sources that hold the most influence will also differ, and cited sources will vary depending on the platform.

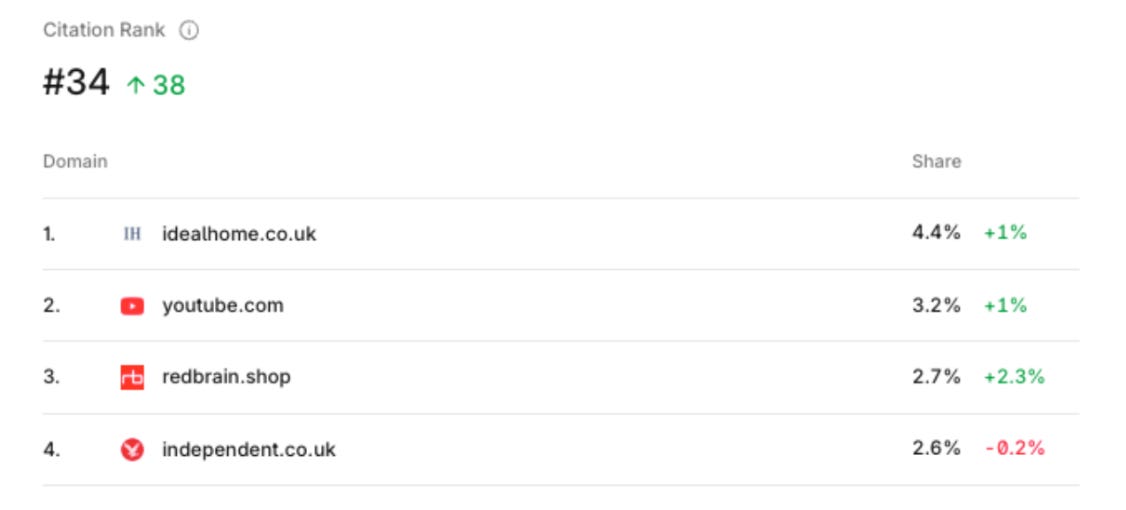

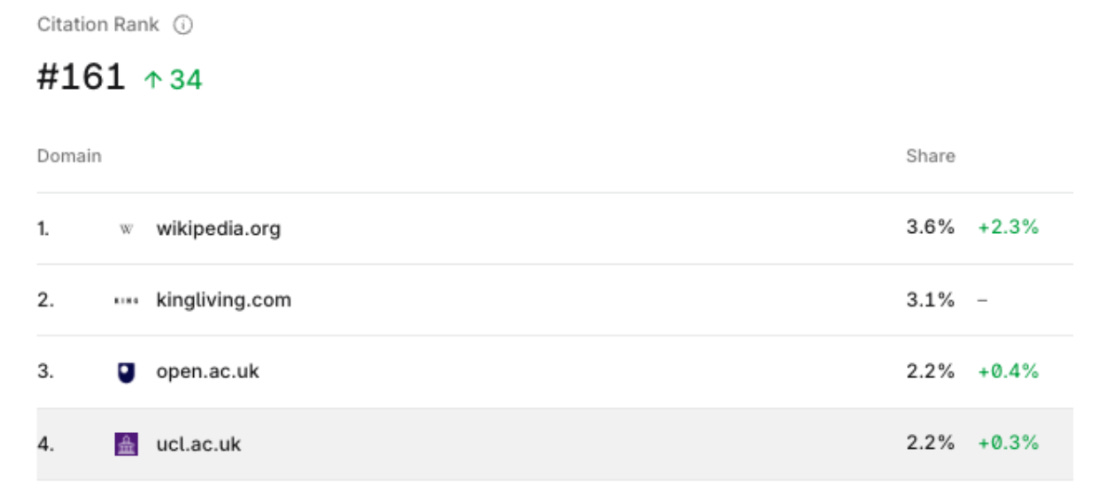

Below shows two different anonymised personas for a brand in the interiors space, which showcases the differences between the types of cited sources depending on the given persona.

Persona A

Persona B

The domains cited across the different platforms demonstrate the importance of a varied PR strategy that targets a wide range of publications. In short, AI understands the nuances of different personas by category, and so should you.

E - Expert-Led Content

PR offers a natural opportunity to showcase your expertise and credibility as a brand, both to the world and to AI.

Combining expert commentary with real-life stories builds authenticity and trust, which offers AI the most valuable signals for your brand. Over time, consistent expertise helps AI differentiate your brand from your competitors.

E - Enriched Product Coverage

Strong product-led coverage, whether affiliate or not, helps AI understand what you sell.

Securing coverage in top-tier product round-ups gives you the opportunity to share key product information, such as price points, key features and the benefits of your product. All of these send the right signals and connect your brand with your audience’s purchasing intent.

Product coverage earned through PR not only teaches AI who you are, but also gives you the opportunity to tell your audience about your products directly.

This helps your products gain the visibility they need for bottom-of-the-funnel, more commercially led search terms.

D - Distinct Brand Message

Consistent coverage here allows you to tell your audience, and AI, what your brand values are and exactly what you stand for.

Your PR strategy should tell a clear story about who you are and what defines you.

Consistently securing coverage in trusted sources builds a consensus around your brand messaging within AI, and it’s clear that earned media is driving the large majority of citations.

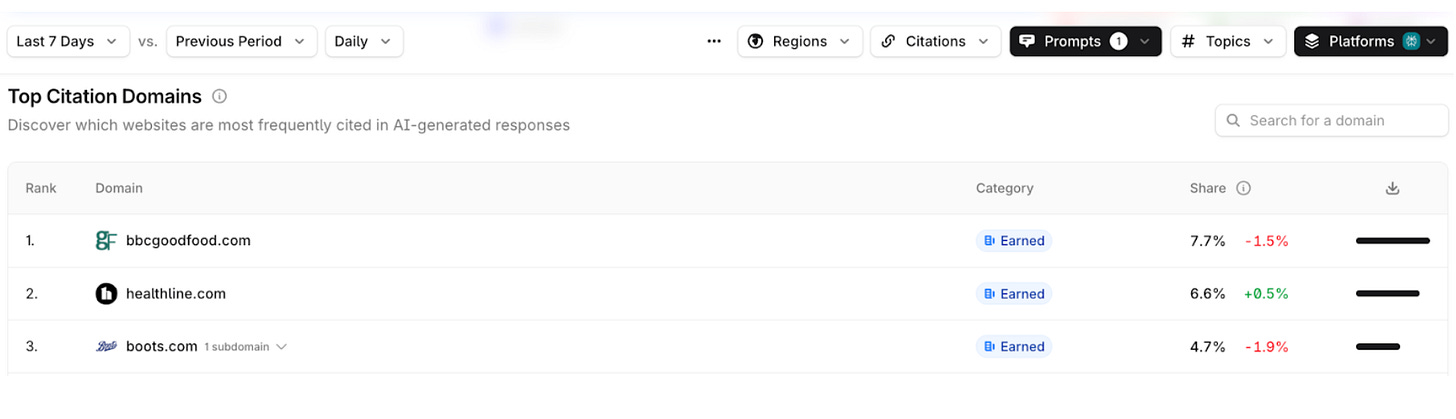

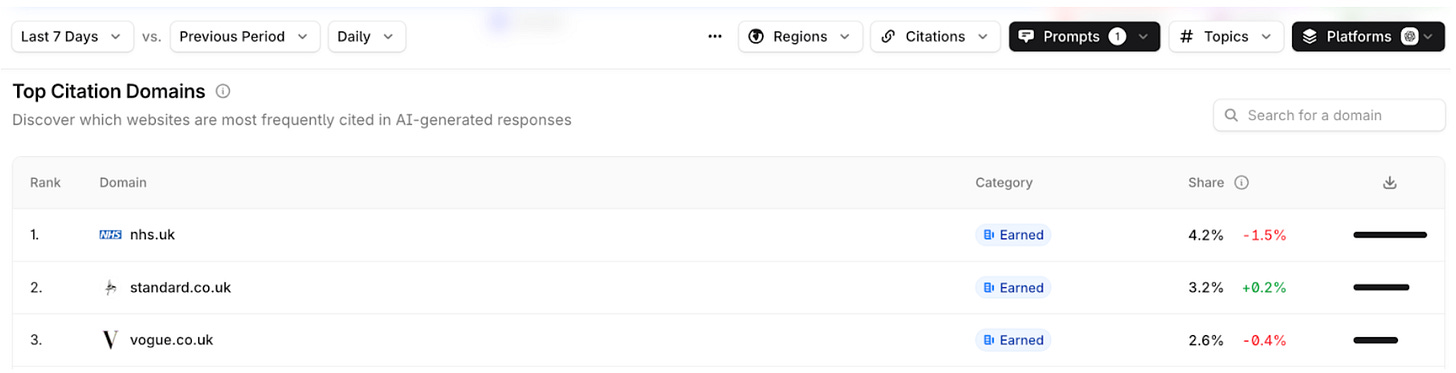

AI, though, is not one single platform. It’s becoming increasingly evident over time that each model cites sources differently. As an example, ChatGPT and Perplexity do not prioritise sources in the same way. See below an example from a British nutraceutical brand:

Perplexity leads with sources from BBC Good Food, whereas ChatGPT takes a far more formalised approach to the authority it prioritises:

No brands in sight, they see the NHS website as their main source of truth, alongside two hugely reputable news publications.

This shows that the two models source their information differently, and underlines why a well-rounded, consistent strategy needs to be in place.

Traditionally, PRs have depended on strong contacts, which eventually lead to repeated coverage in the same publications. The examples throughout the SEED framework demonstrate that this approach isn’t effective with LLMs; you need to adopt a content-led strategy that secures diversified coverage.

Where Does Brand Sentiment Fit In?

Brand sentiment has often been seen as a softer metric, particularly by SEOs: something to monitor and be aware of, but not something that could be optimised for search performance.

But as LLMs evolve, it is clear that brand sentiment is becoming a strong signal.

A positive, consistent brand narrative reinforces not only who you are as a brand, but why you can be seen as trustworthy and reliable.

Anything negative or inconsistent creates ambiguity for LLMs, and that becomes a barrier to your brand being cited as a source.

This is why sentiment now underpins relevancy and credibility. As an industry, PR used to take the line that ‘all press is good press’; that is fundamentally no longer the case. Negative brand sentiment will impact your brand’s ability to be surfaced in AI answers.

The good news? AI views brand sentiment in the same way humans do:

Positive sentiment builds a level of trust

Trust drives authority around key topics

Authority then fuels visibility

What Does Success Look Like For PR In An AI-First World?

Success for PR in an AI-driven landscape is going to look different from the traditional reporting PRs have relied on for many years.

It’s no longer going to be about the Domain Rating (DR) of coverage or the number of backlinks you build for a specific campaign; it’s about whether your brand is being consistently cited and understood by LLMs.

The key questions to ask yourself when assessing whether your PR strategy is working:

Is the coverage you’re securing relevant to your key target categories?

Are you appearing in the same places as your competitors, or are you dominating the conversation in your industry?

Is your brand messaging consistent across all of your owned, earned and social channels?

Does the overall strategy offer AI the opportunity to build consensus around your brand, your brand values and the products you sell?

When you can answer these questions with confidence, your brand will start to be understood by both your human audience and AI.

The Opportunity for PR

This shift isn’t a threat to PR; it’s the biggest opportunity we’ve had as a channel for years.

We know that a brilliant PR strategy can directly impact how AI understands a brand. That means PR doesn’t just sit at the top of the funnel driving awareness; we’re an integral part of how consumers make their purchasing decisions.

With any change comes a challenge. In theory, this is a massive opportunity for PR as a channel, and one that needs to be grasped, but the challenge must be understood in equal measure. Patience is required, because building consistency isn’t easy, but following the right processes for your brand will help you perform brilliantly in an AI-driven search world.

Aligning key campaigns and your overall strategy with key categories, tracking the right metrics and communicating your brand’s values will all be key to helping AI understand who you are as a brand.

AI is changing how people search and how they purchase, but it does not change the fundamentals of good marketing. Relevance, expertise and positive brand sentiment have always been the keys to building a high-performing brand, and they will be the keys to doing the same in AI search.

